Can’t-Miss Takeaways on How to Prevent Web Crawlers

Solutions like these would help, but I need to know how to apply them:

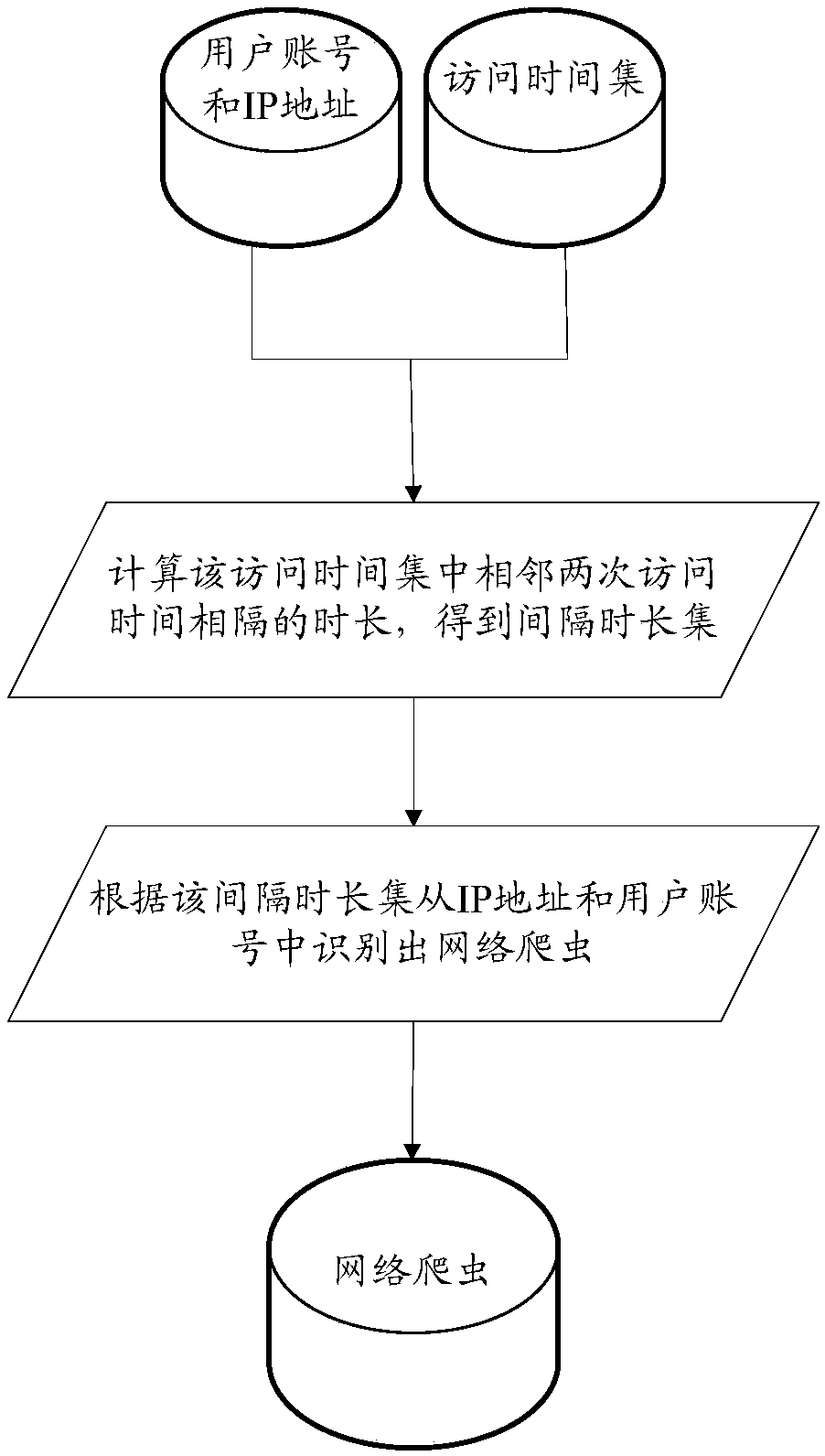

How to prevent web crawlers, along with some more advanced and actionable concepts. To keep web crawlers out of sections of their websites, companies can use several strategies. There are two ways to block access to certain web pages: a robots.txt file at the root of your domain, or the robots meta tag.

Envira Gallery (anti image theft): Envira Gallery is a WordPress gallery plugin that can help protect your images from theft. You can also ask search engines not to crawl your WordPress site, and follow good-practice tips and tricks to prevent crawler traps.

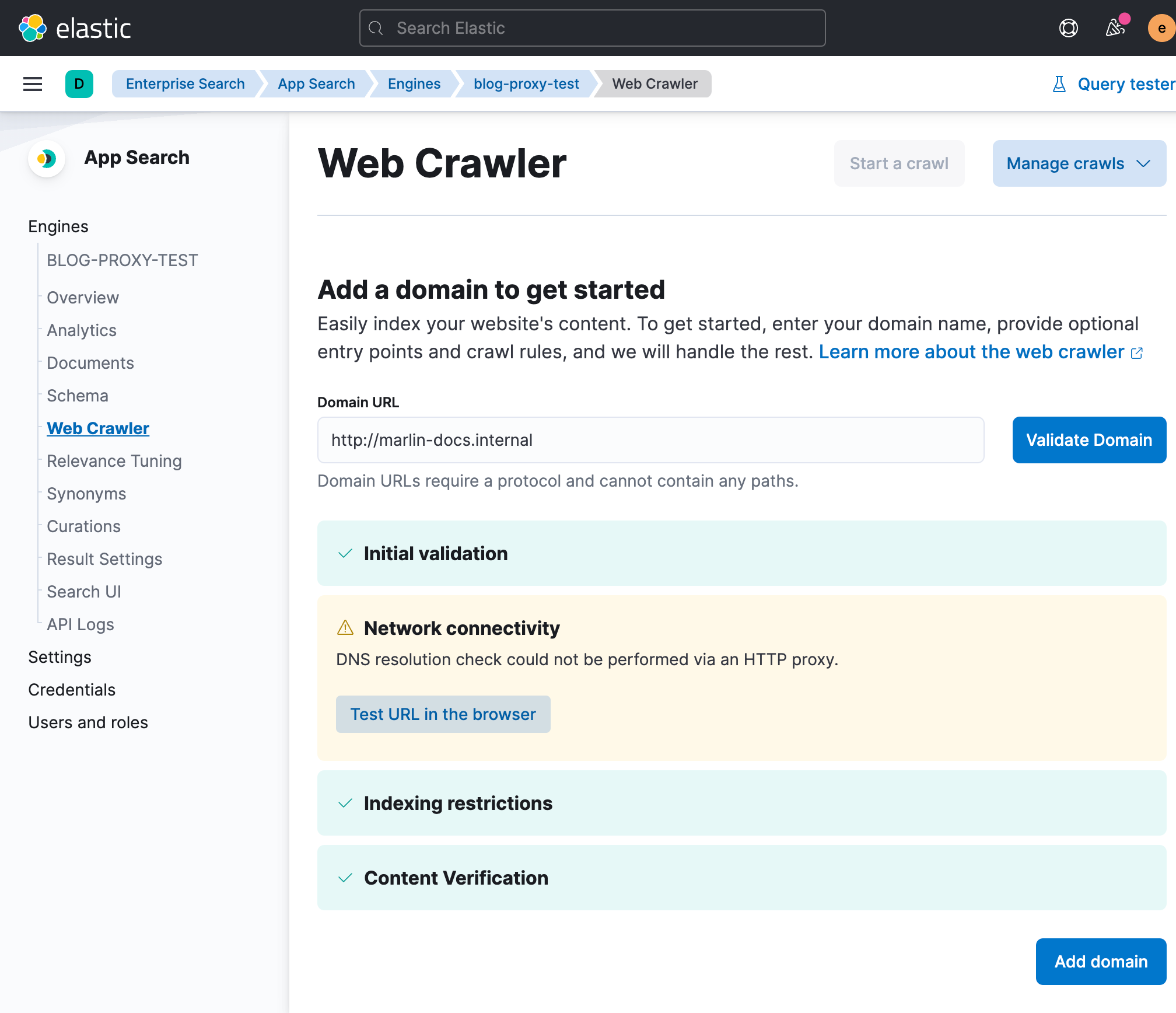

How to use robots.txt to disallow search engines. The file tells crawlers which pages they can and cannot crawl. Like most other web crawlers, GPTBot can be blocked from accessing your website by modifying the robots.txt file.
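As a minimal sketch of how such rules behave, the snippet below parses a hypothetical robots.txt with Python's standard `urllib.robotparser` and checks what each crawler may fetch. The `/private/` directory and the `example.com` URLs are assumptions made up for illustration; `GPTBot` is the user-agent token OpenAI's crawler identifies itself with.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content: block GPTBot from the whole site and
# keep every other crawler out of an assumed /private/ directory.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# GPTBot is disallowed everywhere.
print(parser.can_fetch("GPTBot", "https://example.com/index.html"))  # False
# Other crawlers may fetch public pages but not the private directory.
print(parser.can_fetch("Googlebot", "https://example.com/index.html"))  # True
print(parser.can_fetch("Googlebot", "https://example.com/private/report.html"))  # False
```

Checking rules this way before deploying the file can catch a typo that would otherwise block, or expose, more than intended.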

Requiring a username and password for access keeps crawlers out of protected content. Crawler traps usually originate from a technical design flaw. If you want to check your…
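One common way to require a username and password is HTTP Basic authentication. The sketch below shows only the credential check, under stated assumptions: `EXPECTED_USER`, `EXPECTED_PASSWORD`, and `is_authorized` are hypothetical names, and a real site would store hashed passwords and serve the pages over HTTPS.

```python
import base64
import hmac

# Hypothetical credentials, hard-coded for illustration only.
EXPECTED_USER = "editor"
EXPECTED_PASSWORD = "s3cret"


def is_authorized(authorization_header):
    """Return True if the Authorization header carries the expected Basic credentials."""
    if not authorization_header or not authorization_header.startswith("Basic "):
        return False
    encoded = authorization_header[len("Basic "):]
    try:
        decoded = base64.b64decode(encoded, validate=True).decode("utf-8")
    except (ValueError, UnicodeDecodeError):
        # Malformed base64 or non-UTF-8 bytes: treat as unauthorized.
        return False
    user, separator, password = decoded.partition(":")
    if not separator:
        return False
    # compare_digest avoids leaking information through timing differences.
    user_ok = hmac.compare_digest(user.encode(), EXPECTED_USER.encode())
    password_ok = hmac.compare_digest(password.encode(), EXPECTED_PASSWORD.encode())
    return user_ok and password_ok
```

A request handler would answer with status 401 and a `WWW-Authenticate: Basic` header whenever this check fails, so a crawler without credentials never sees the page body.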

How to prevent OpenAI from crawling your website. How to avoid web crawler detection. Adding a “noindex” tag to your landing page keeps that page out of search results.

You can also use code on your web pages to deter bots and crawlers from visiting them. With crawler traps, prevention is better than cure. If your robots.txt file looks like…
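A minimal sketch of such code is a server-side check on the `User-Agent` header. The token list and the function names here are assumptions chosen for illustration, and because a client can send any user-agent string it likes, this only deters crawlers that identify themselves honestly.

```python
# Illustrative, hypothetical blocklist of lowercase user-agent tokens.
BLOCKED_AGENT_TOKENS = ("gptbot", "ccbot")


def is_blocked_crawler(user_agent):
    """Return True if the User-Agent string contains a blocked token."""
    if not user_agent:
        return False
    lowered = user_agent.lower()
    return any(token in lowered for token in BLOCKED_AGENT_TOKENS)


def status_for(user_agent):
    """Pick an HTTP status code: 403 for blocked crawlers, 200 otherwise."""
    return 403 if is_blocked_crawler(user_agent) else 200


print(status_for("Mozilla/5.0 AppleWebKit/537.36; compatible; GPTBot/1.0"))  # 403
print(status_for("Mozilla/5.0 (X11; Linux x86_64) Firefox/120.0"))  # 200
```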

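Crawling is essential for every website, large and small alike. You could make users perform a task that is easy for humans and hard for machines, such as a CAPTCHA. Blocking by robots.txt requires knowing the root of your domain, because that is where crawlers look for the file; the alternative is the robots meta tag.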

The only way to stop a site from being machine-ripped is to make the user prove that they are human. Use web scraping protection best practices to tackle scraping attacks and minimize the amount of scraping that can occur. Search engine spiders will not…

Search engines first look at your robots.txt file. If your content is not being crawled, it has no chance of appearing in search results. One way to control this is to use a robots.txt file to tell crawlers which pages to avoid.

To prevent all search engines that support the noindex rule from indexing a page on your site, place the following tag into the `<head>` section of your page: `<meta name="robots" content="noindex">`. While web crawling has significant benefits for users, it can also significantly increase the load on websites. Directives like these are the simplest method, but compliance is voluntary, so they do not fully protect your website from being crawled.
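To confirm that a page really carries the noindex rule, the markup can be inspected with Python's standard `html.parser`. This is a sketch under stated assumptions: `RobotsMetaParser`, `has_noindex`, and the sample `PAGE` are hypothetical names and data, and the check covers only the robots meta tag, not HTTP response headers.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None when an attribute has no value.
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "robots":
            for directive in attr_map.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html):
    """Return True if the page asks compliant search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


# Hypothetical page used only to exercise the check.
PAGE = """<html><head>
<meta name="robots" content="noindex, nofollow">
</head><body>Hidden from search results</body></html>"""

print(has_noindex(PAGE))  # True
```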